Spotlight on New Features

Our teams are constantly working to bring the latest innovation to insurance and to empower you to be faster and better informed than ever. This release focuses on giving you more knowledge of, and insight into, our AI. Here is what has changed…

Annotation Analysis

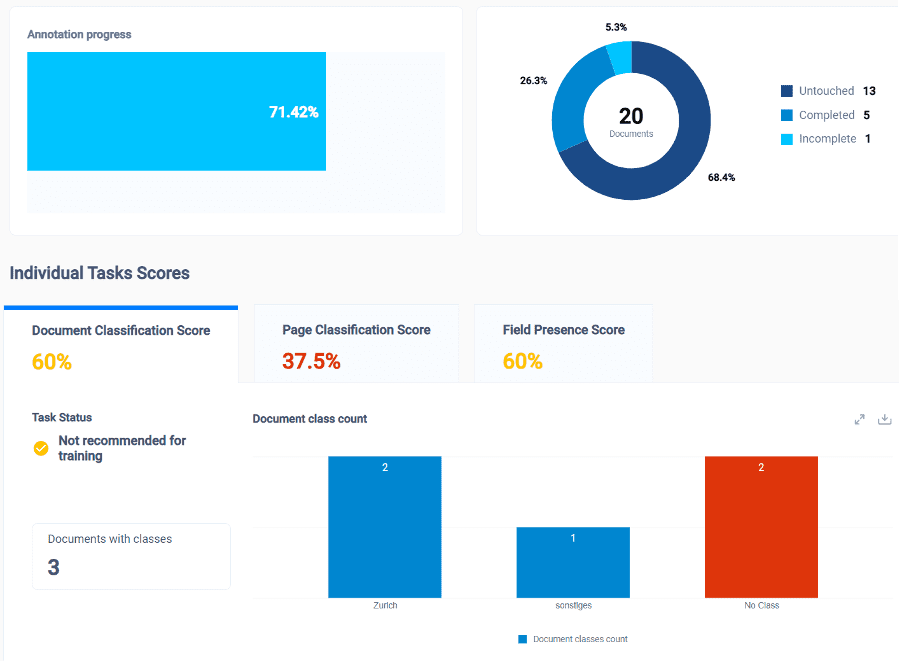

The omni:us Trainer – Annotation Analysis service provides a detailed analytical report on the status and quality of annotation. If required, you can use it to improve AI Model performance by improving the quality of your annotation (ground-truthing). You can select an AI Project and its associated collections to view the percentage of documents that are not annotated, partially annotated, or completely annotated.
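To make the three annotation states concrete, here is a minimal Python sketch of how such a percentage breakdown could be computed. The `Document` class, its `annotated_items` and `expected_items` fields, and the rule that a document counts as complete once every expected item is annotated are hypothetical assumptions for illustration; they do not describe how the omni:us Trainer actually builds its report.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass

STATUSES = ("not annotated", "partially annotated", "completely annotated")


@dataclass
class Document:
    """Hypothetical stand-in for a document in a collection."""
    name: str
    annotated_items: int  # items (e.g. fields) that already carry an annotation
    expected_items: int   # items the AI Project expects to be annotated


def annotation_status(doc: Document) -> str:
    """Classify one document; the completeness rule is an assumption."""
    if doc.annotated_items == 0:
        return "not annotated"
    if doc.annotated_items >= doc.expected_items:
        return "completely annotated"
    return "partially annotated"


def status_breakdown(docs: list[Document]) -> dict[str, float]:
    """Return the percentage of documents in each annotation status."""
    counts = Counter(annotation_status(d) for d in docs)
    total = len(docs)
    # Counter returns 0 for statuses that never occur; guard against an empty collection.
    return {s: (100.0 * counts[s] / total if total else 0.0) for s in STATUSES}


docs = [
    Document("claim_001.pdf", 0, 5),
    Document("claim_002.pdf", 3, 5),
    Document("claim_003.pdf", 5, 5),
    Document("claim_004.pdf", 5, 5),
]
print(status_breakdown(docs))
# {'not annotated': 25.0, 'partially annotated': 25.0, 'completely annotated': 50.0}
```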

The Individual Tasks Scores section displays four tabs with a detailed report of the annotation quality, using color-coded percentage values for the document classification score, page classification score, field presence score, and field transcription score. Based on the percentage value for each task, you can see whether that task is ready for AI training. For example, if more than 80% of the documents have a document class assigned, the document classification score is shown in green and the task status is “Ready for Training.”
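The readiness rule in the example above can be sketched in a few lines of Python. This is an illustration only: the function names and the "Not Ready for Training" label are hypothetical, and the release notes state only the 80% threshold for document classification, so reusing it for the other tasks would be an assumption. Because the rule says "more than 80%", the sketch uses a strict comparison, so exactly 80% does not qualify.

```python
from __future__ import annotations

from typing import Optional

# Threshold quoted for the document classification task.
# Applying the same value to other tasks is an assumption.
READY_THRESHOLD = 80.0


def document_classification_score(doc_classes: list[Optional[str]]) -> float:
    """Percentage of documents with a document class assigned (None = unassigned)."""
    if not doc_classes:
        return 0.0
    assigned = sum(1 for c in doc_classes if c is not None)
    return 100.0 * assigned / len(doc_classes)


def task_status(score: float, threshold: float = READY_THRESHOLD) -> tuple[bool, str]:
    """Return (is_green, status label) using a strict 'more than' comparison."""
    is_green = score > threshold
    # The negative label is hypothetical; only "Ready for Training" is documented.
    label = "Ready for Training" if is_green else "Not Ready for Training"
    return is_green, label


nine_of_ten = ["invoice"] * 9 + [None]
eight_of_ten = ["invoice"] * 8 + [None, None]
print(task_status(document_classification_score(nine_of_ten)))   # (True, 'Ready for Training')
print(task_status(document_classification_score(eight_of_ten)))  # (False, 'Not Ready for Training')
```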

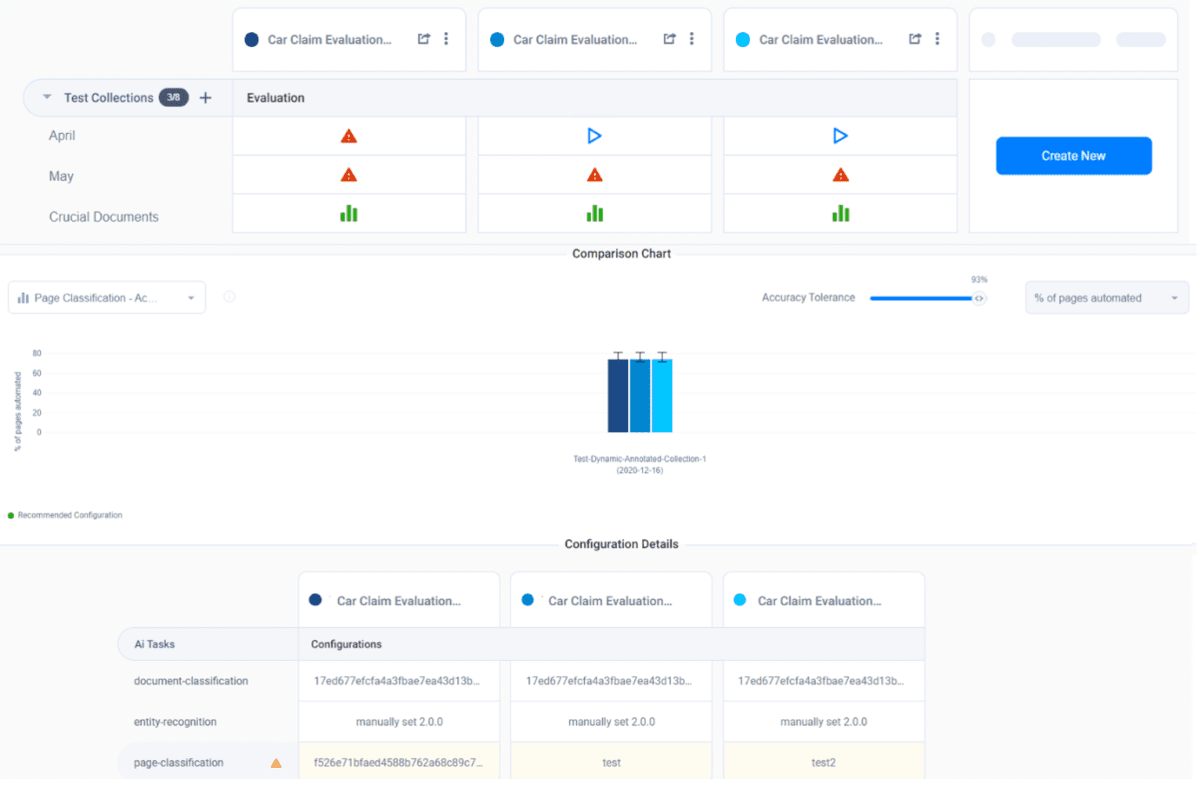

Prediction Evaluation and Comparison

The omni:us Trainer – Prediction Evaluation service helps you understand the end-to-end performance of a combination of AI Models (machine learning models) in a configured pipeline that you later want to deploy for document processing. You can view an in-depth performance analysis of the AI Models on a selected benchmark data set (Test Collection).

To create a pipeline configuration, select the specific AI Models whose performance you want to compare for each AI Task. The Evaluation Report of each AI Pipeline shows which models reach the expected accuracy for information extraction, document classification, and page classification, along with the character error rate. A comparison table displays the performance metrics of up to five AI Pipelines for each evaluation parameter. Because different AI Models for the same AI Task are compared on an identical benchmark set, you can identify the best-performing AI Models and deploy them for document processing.
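The comparison step described above can be sketched in Python: given one evaluation report per pipeline, pick the best pipeline for each evaluation parameter. The metric keys, the report format, and the values in the usage example are made up for illustration and are not the Trainer's actual data model. The one design point worth noting is that character error rate is an error measure, so the sketch treats lower values as better, while the other parameters are treated as higher-is-better.

```python
from __future__ import annotations

MAX_PIPELINES = 5  # the comparison table covers up to five AI Pipelines

# Direction of "better" per evaluation parameter (hypothetical metric keys).
HIGHER_IS_BETTER = {
    "information_extraction": True,
    "document_classification": True,
    "page_classification": True,
    "character_error_rate": False,  # an error rate: lower is better
}


def best_per_metric(reports: dict[str, dict[str, float]]) -> dict[str, str]:
    """Return, for each metric, the name of the best-scoring pipeline."""
    if not 1 <= len(reports) <= MAX_PIPELINES:
        raise ValueError(f"compare between 1 and {MAX_PIPELINES} pipelines")
    best = {}
    for metric, higher in HIGHER_IS_BETTER.items():
        pick = max if higher else min
        # Iterating a dict yields pipeline names; ties go to the first one listed.
        best[metric] = pick(reports, key=lambda name: reports[name][metric])
    return best


# Made-up evaluation results for two hypothetical pipelines.
reports = {
    "pipeline_a": {"information_extraction": 0.91, "document_classification": 0.97,
                   "page_classification": 0.95, "character_error_rate": 0.04},
    "pipeline_b": {"information_extraction": 0.88, "document_classification": 0.98,
                   "page_classification": 0.93, "character_error_rate": 0.03},
}
print(best_per_metric(reports))
# {'information_extraction': 'pipeline_a', 'document_classification': 'pipeline_b',
#  'page_classification': 'pipeline_a', 'character_error_rate': 'pipeline_b'}
```

Comparing per parameter, rather than collapsing everything into one score, mirrors the idea of a table that lists each evaluation parameter separately; a single pipeline may win on some tasks and lose on others.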

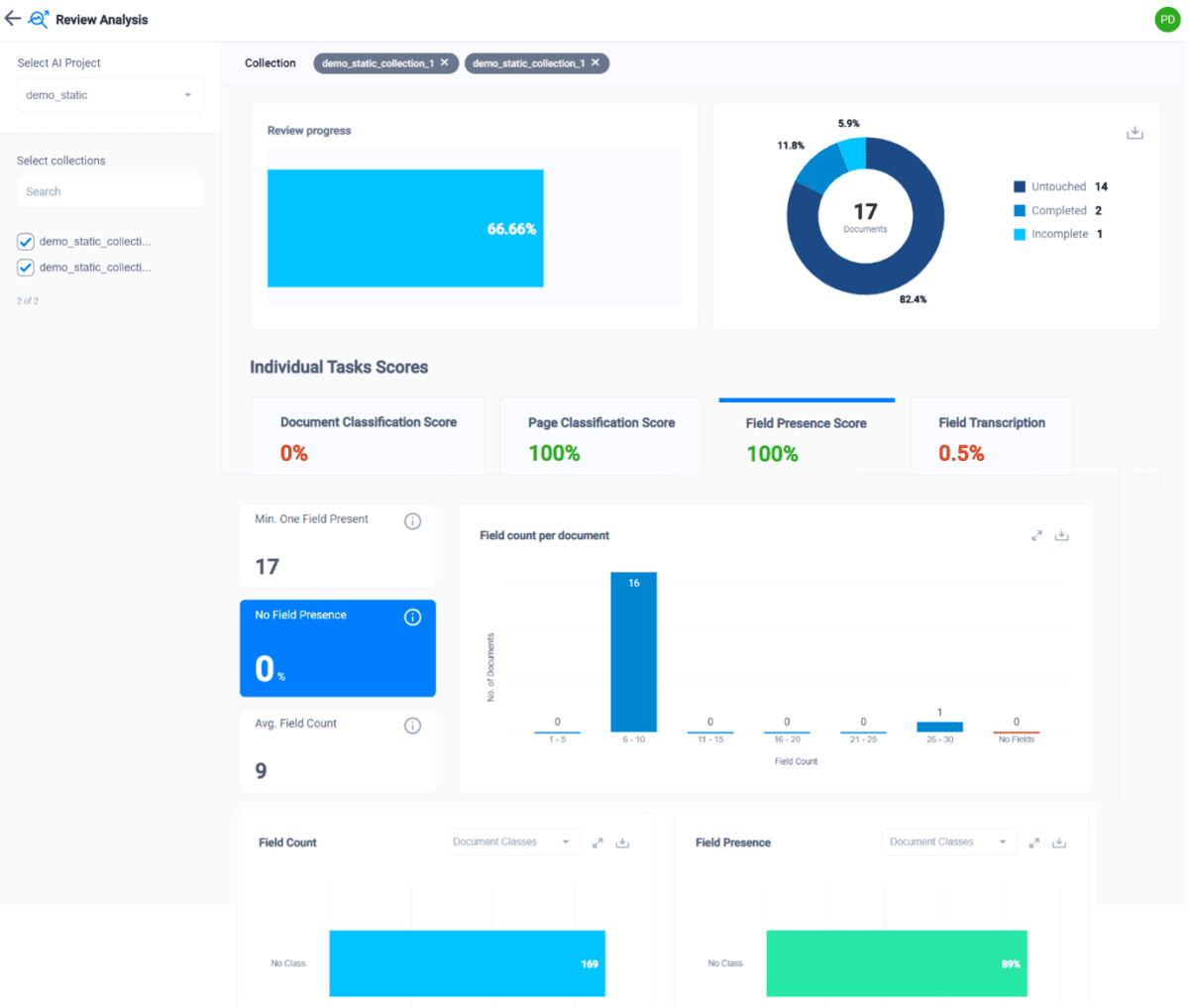

Review Analysis for Engine

The omni:us Engine – Review Analysis service provides a detailed analytical report on the status and quality of document review. You can select an AI Project and its associated collections to view the percentage of documents that are not reviewed, partially reviewed, or completely reviewed.

The Individual Tasks Scores section displays four tabs containing a detailed report of the review quality with color-coded percentage values indicating the document classification score, page classification score, field presence score, and field transcription score.

Enhancement

Team Lead Able to Delete Documents From the Document Explorer

Users with the Team Lead role can delete any document from the Document Explorer screen in the Trainer or Engine, regardless of the document's current status.

___

For queries or comments, reach us at [email protected]

___